Understanding Overfitting and How to Prevent It

Alexandra Twin has 15+ years of experience as an editor and writer, covering financial news for public and private companies.

What Is Overfitting?

Overfitting is a modeling error in statistics that occurs when a function is too closely aligned to a limited set of data points. As a result, the model is useful only for its initial data set and not for any other data sets.

Overfitting typically involves building an overly complex model to explain idiosyncrasies in the data under study. However, real data usually contains some degree of error or random noise. Forcing the model to conform closely to slightly inaccurate data builds those errors into the model, which can lead to substantial errors and reduce its predictive power.
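The effect can be reproduced with a small simulation. This is an illustrative sketch only: it assumes the true relationship is a straight line (y = 1 + 2x) observed with Gaussian noise, and it compares a simple least-squares line with an overly complex polynomial that passes exactly through every training point. The helper names (`fit_line`, `fit_interpolant`, `mse`) are hypothetical and not taken from any library.

```python
import random

def fit_line(xs, ys):
    """Least-squares line y = a + b*x: a deliberately simple model."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    b = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    a = my - b * mx
    return lambda x: a + b * x

def fit_interpolant(xs, ys):
    """Degree-(n-1) polynomial through every point (Lagrange form): an overly complex model."""
    def predict(x):
        total = 0.0
        for i, (xi, yi) in enumerate(zip(xs, ys)):
            basis = 1.0
            for j, xj in enumerate(xs):
                if j != i:
                    basis *= (x - xj) / (xi - xj)
            total += yi * basis
        return total
    return predict

def mse(model, xs, ys):
    """Mean squared error of a model's predictions."""
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

random.seed(0)
true_fn = lambda x: 1.0 + 2.0 * x                 # assumed true relationship: a straight line
n = 15
train_x = [i / (n - 1) for i in range(n)]         # evenly spaced inputs on [0, 1]
train_y = [true_fn(x) + random.gauss(0, 0.3) for x in train_x]  # noisy observations
# New inputs the models never saw: midpoints between training inputs, scored against the true line
test_x = [(train_x[i] + train_x[i + 1]) / 2 for i in range(n - 1)]
test_y = [true_fn(x) for x in test_x]

line_model = fit_line(train_x, train_y)
wiggly_model = fit_interpolant(train_x, train_y)

print(f"line:        train MSE={mse(line_model, train_x, train_y):.3f}  test MSE={mse(line_model, test_x, test_y):.3f}")
print(f"interpolant: train MSE={mse(wiggly_model, train_x, train_y):.3f}  test MSE={mse(wiggly_model, test_x, test_y):.3f}")
```

The interpolant's training error is zero because it reproduces every noisy observation, but between those observations it tends to swing far from the true line, so its error on new points is much larger than the simple line's.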

Key Takeaways

– Overfitting is an error in data modeling that occurs when a function aligns too closely to a limited set of data points.

– Financial professionals who build models from limited data are at risk of overfitting, which leads to flawed results.

– Overfitting compromises the model’s value as a predictive tool for investing.

– A data model can also be underfitted, meaning it is too simple, with too few data points to be effective.

– Overfitting is a more frequent problem than underfitting and typically occurs when modelers add complexity in an effort to avoid underfitting.

Understanding Overfitting

A common problem arises when computer algorithms are used to search extensive databases of historical market data for patterns. Given enough searching, it is possible to develop elaborate theorems that appear to predict stock market returns with high accuracy.

However, when applied to data outside of the sample, such theorems may prove to be merely the overfitting of a model to chance occurrences. That is why it is important to test a model against data outside of the sample used to develop it.
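A toy simulation shows why out-of-sample testing matters. This sketch assumes hypothetical daily returns that are pure random noise and 1,000 hypothetical trading rules that each take random long or short positions, so none of them has a real edge. Selecting the rules that performed best on the first half of the data (in-sample) and then checking them on the second half (out-of-sample) exposes their apparent skill as chance. The setup and names are illustrative assumptions, not a real backtesting method.

```python
import random
import statistics

random.seed(1)
n_days, n_rules = 500, 1000
# Hypothetical daily returns with no exploitable pattern: pure noise.
returns = [random.gauss(0.0, 0.01) for _ in range(n_days)]
split = n_days // 2                      # first half in-sample, second half out-of-sample
in_days, out_days = range(split), range(split, n_days)

def rule_returns(positions, days):
    """Daily P&L of a rule that holds positions[d] (+1 long, -1 short) on day d."""
    return [positions[d] * returns[d] for d in days]

# Each hypothetical "rule" is a random long/short schedule, so it has no real edge.
rules = [[random.choice((1, -1)) for _ in range(n_days)] for _ in range(n_rules)]

# Data-mining step: rank rules by their in-sample mean return and keep the top 10.
ranked = sorted(rules, key=lambda r: statistics.mean(rule_returns(r, in_days)), reverse=True)
top = ranked[:10]

in_sample = statistics.mean(statistics.mean(rule_returns(r, in_days)) for r in top)
out_sample = statistics.mean(statistics.mean(rule_returns(r, out_days)) for r in top)
print(f"top-10 mean daily return, in-sample:     {in_sample:.5f}")
print(f"top-10 mean daily return, out-of-sample: {out_sample:.5f}")
```

Because the rules were chosen for their in-sample performance, their in-sample average is inflated by the selection itself, while their out-of-sample average hovers around zero.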

How to Prevent Overfitting

Ways to prevent overfitting include cross-validation, in which the training data is divided into folds, or partitions. The model is trained on all but one fold and tested on the fold that was held out, and the process is repeated so that each fold serves once as the test set. The error estimates from all the runs are then averaged. Other methods include ensembling, data augmentation, and data simplification, which streamlines the model to avoid overfitting.
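A minimal k-fold cross-validation loop might look like the following sketch. It assumes a least-squares line as the model and mean squared error as the error measure; `k_fold_cv` and `fit_line` are hypothetical helpers written for this illustration, not a library API. Each fold is held out once while the model is trained on the remaining folds, and the held-out errors are averaged.

```python
import random

def fit_line(xs, ys):
    """Least-squares line y = a + b*x (the model being validated)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    b = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    a = my - b * mx
    return lambda x: a + b * x

def k_fold_cv(xs, ys, fit, k=5, seed=0):
    """Return the per-fold held-out errors (MSE) and their average."""
    idx = list(range(len(xs)))
    random.Random(seed).shuffle(idx)              # shuffle before partitioning
    folds = [idx[i::k] for i in range(k)]         # k roughly equal folds
    fold_errors = []
    for held_out in folds:
        held = set(held_out)
        train = [i for i in idx if i not in held]  # train on the other k - 1 folds
        model = fit([xs[i] for i in train], [ys[i] for i in train])
        err = sum((model(xs[i]) - ys[i]) ** 2 for i in held_out) / len(held_out)
        fold_errors.append(err)                    # error on the fold the model never saw
    return fold_errors, sum(fold_errors) / k

# Usage with hypothetical noisy data
rng = random.Random(42)
xs = [i / 49 for i in range(50)]
ys = [1.0 + 2.0 * x + rng.gauss(0, 0.3) for x in xs]
fold_errors, cv_error = k_fold_cv(xs, ys, fit_line, k=5)
print("per-fold MSE:", [round(e, 3) for e in fold_errors])
print("cross-validated MSE:", round(cv_error, 3))
```

Shuffling before splitting avoids folds that each cover only one region of the data. For real work, scikit-learn's `KFold` and `cross_val_score` provide tested implementations of the same procedure.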

Financial professionals must always be aware of the dangers of overfitting or underfitting a model based on limited data. The ideal model strikes a balance between the two.

Overfitting in Machine Learning

Overfitting is also a factor in machine learning. It can occur when a model has been trained to detect patterns in one set of data so closely that its results are incorrect when it is applied to a new set of data. This happens because the model has learned the noise in its training data along with the underlying pattern, often because it is overly complicated.

Overfitting vs. Underfitting

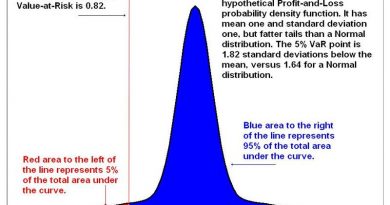

A model that is overfitted may be too complicated and ineffective. Conversely, a model can be underfitted, meaning it is too simple, with too few features and too little data. An overfit model has low bias and high variance, while an underfit model has high bias and low variance. Adding more features to a too-simple model can help limit bias.
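Bias and variance can be estimated by simulation: refit each model on many fresh noisy samples and look at how its prediction at a single input behaves. This sketch assumes a sine-shaped true relationship, Gaussian noise, a constant "predict the average" model standing in for an underfit model, and an exact-fit polynomial standing in for an overfit one. All function names are hypothetical.

```python
import math
import random
import statistics

def fit_constant(xs, ys):
    """Underfit model: ignores x and always predicts the training average."""
    avg = sum(ys) / len(ys)
    return lambda x: avg

def fit_interpolant(xs, ys):
    """Overfit model: degree-(n-1) polynomial through every training point (Lagrange form)."""
    def predict(x):
        total = 0.0
        for i, (xi, yi) in enumerate(zip(xs, ys)):
            basis = 1.0
            for j, xj in enumerate(xs):
                if j != i:
                    basis *= (x - xj) / (xi - xj)
            total += yi * basis
        return total
    return predict

def bias_variance(fit, x0, trials=2000, n=10, noise=0.3, seed=0):
    """Estimate bias^2 and variance of fit's prediction at x0 over many fresh training sets."""
    rng = random.Random(seed)
    true_fn = lambda x: math.sin(2 * math.pi * x)       # assumed true relationship
    xs = [i / (n - 1) for i in range(n)]
    preds = []
    for _ in range(trials):
        ys = [true_fn(x) + rng.gauss(0, noise) for x in xs]  # a new noisy sample each trial
        preds.append(fit(xs, ys)(x0))
    bias_sq = (statistics.mean(preds) - true_fn(x0)) ** 2
    return bias_sq, statistics.pvariance(preds)

x0 = 5 / 18  # an input that falls between two training inputs
results = {name: bias_variance(fit, x0)
           for name, fit in [("underfit", fit_constant), ("overfit", fit_interpolant)]}
for name, (b2, var) in results.items():
    print(f"{name:9s} bias^2={b2:.4f}  variance={var:.4f}")
```

Bias² measures how far the average prediction lands from the truth; variance measures how much the prediction moves from one training sample to the next. The constant model shows large bias² and small variance, and the interpolant shows the reverse.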

Overfitting Example

For example, a university experiencing a high college dropout rate wants to create a model to predict graduation likelihood.

The university trains a model with a dataset of 5,000 applicants and their outcomes. It predicts the outcome with 98% accuracy for the original dataset. However, when tested on a second dataset of 5,000 applicants, the model is only 50% accurate. This is because the model was fit too closely to a narrow data subset: the first 5,000 applicants.
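The same pattern can be mimicked with synthetic data. The sketch below is hypothetical: the three applicant features and the coin-flip graduation labels are invented, and the model is a 1-nearest-neighbor classifier that effectively memorizes the first cohort. It does not reproduce the 98% and 50% figures above; it only shows near-perfect accuracy on the training cohort alongside roughly chance-level accuracy on a new one.

```python
import random

rng = random.Random(7)

def make_applicants(n):
    """Hypothetical applicants: 3 numeric features and a graduated (True/False) label.
    The label is a coin flip, so the features carry no real signal."""
    return [([rng.random() for _ in range(3)], rng.random() < 0.5) for _ in range(n)]

def nearest_neighbor_model(train):
    """Memorizing model: predict the label of the most similar training applicant."""
    def predict(features):
        _, label = min(train, key=lambda row: sum((a - b) ** 2 for a, b in zip(row[0], features)))
        return label
    return predict

def accuracy(model, rows):
    """Fraction of applicants whose outcome the model predicts correctly."""
    return sum(model(f) == label for f, label in rows) / len(rows)

first_cohort = make_applicants(500)   # data the model is trained on
second_cohort = make_applicants(500)  # new applicants, never seen during training
model = nearest_neighbor_model(first_cohort)
train_acc = accuracy(model, first_cohort)
test_acc = accuracy(model, second_cohort)
print(f"accuracy on training cohort: {train_acc:.0%}")
print(f"accuracy on new cohort:      {test_acc:.0%}")
```

Every training applicant's nearest neighbor is itself, so training accuracy is perfect; only the evaluation on the unseen second cohort reveals that the model learned nothing that generalizes.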