What Is a Confidence Interval and How Do You Calculate It?

What Is a Confidence Interval?

A confidence interval is a range of values, computed from sample data, that is likely to contain an unknown population parameter. The confidence level attached to it, most often 95% or 99%, is the proportion of such intervals that would contain the parameter if the sampling were repeated many times. If a statistical model generates a point estimate of 10.00 with a 95% confidence interval of 9.50 to 10.50, the interval came from a procedure that captures the true value in about 95% of repeated samples; informally, we are 95% confident that the true value falls within that range.

Key Takeaways

– A confidence interval is a range of values around a sample estimate, such as the mean, that is likely to contain a population parameter at a stated confidence level.

– Confidence intervals measure the degree of uncertainty or certainty in a sampling method.

– They are used in hypothesis testing and regression analysis.

– Statisticians often use p-values in conjunction with confidence intervals to gauge statistical significance.

– They are most often constructed using confidence levels of 95% or 99%.

Understanding Confidence Intervals

Confidence intervals quantify the uncertainty in an estimate drawn from a sample. They can be built at any confidence level, with 95% and 99% being the most common. Confidence intervals are constructed using statistical methods, such as those that underlie a t-test.

Statisticians use confidence intervals to measure uncertainty in an estimate of a population parameter based on a sample. For example, a researcher selects different samples randomly from the same population and computes a confidence interval for each sample to see how it may represent the true value of the population variable. Some intervals include the true population parameter and others do not.
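The repeated-sampling idea above can be sketched as a small simulation. This is only an illustration under stated assumptions: the population is taken to be normal with a hypothetical true mean of 10.0 and standard deviation of 2.0, the interval uses the normal approximation, and the helper names `mean_confidence_interval` and `coverage` are made up for this example.

```python
import random
from statistics import NormalDist, mean, stdev


def mean_confidence_interval(sample, level=0.95):
    """Normal-approximation interval for the mean: x_bar +/- z * s / sqrt(n)."""
    n = len(sample)
    z = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.96 for a 95% level
    margin = z * stdev(sample) / n ** 0.5
    center = mean(sample)
    return center - margin, center + margin


def coverage(true_mean=10.0, true_sd=2.0, n=50, trials=2000, level=0.95, seed=0):
    """Share of repeated-sample intervals that contain the (assumed) true mean."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        sample = [rng.gauss(true_mean, true_sd) for _ in range(n)]
        low, high = mean_confidence_interval(sample, level)
        if low <= true_mean <= high:
            hits += 1
    return hits / trials


print(f"share of intervals containing the true mean: {coverage():.3f}")
```

The printed share should land near 0.95: most intervals include the true mean and a few do not, which is what the confidence level describes.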

A confidence interval is a range of values, bounded above and below a sample statistic such as the mean, that would likely contain an unknown population parameter. The confidence level is the percentage of such intervals that would contain the true population parameter if you drew random samples many times and built an interval from each one.

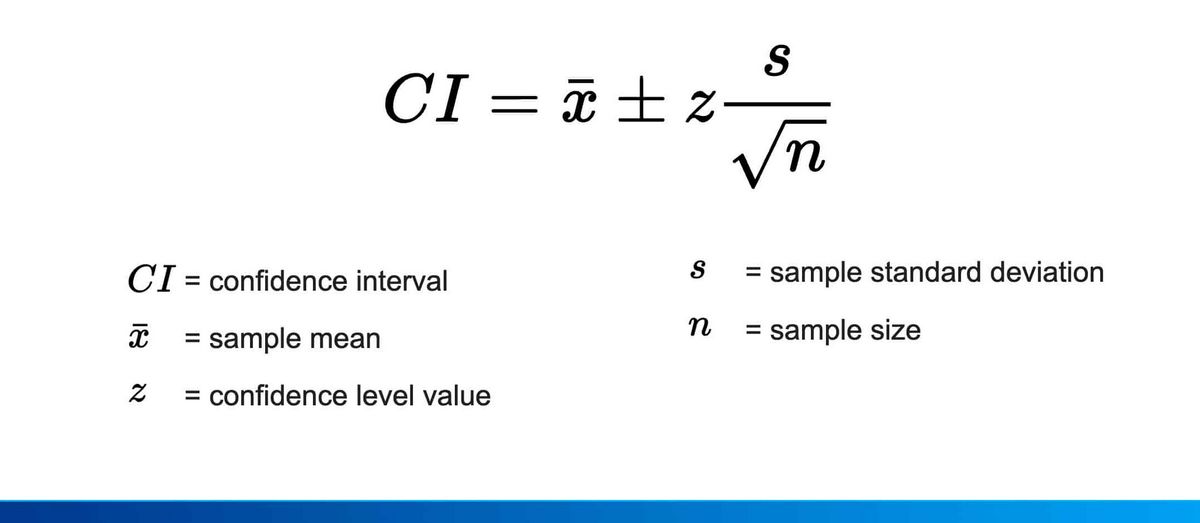

Calculating Confidence Intervals

Suppose researchers are studying the heights of high school basketball players. They take a random sample from the population and establish a mean height of 74 inches.

The mean of 74 inches is a point estimate of the population mean. However, a point estimate by itself is of limited usefulness because it does not reveal the uncertainty associated with the estimate. Confidence intervals provide more information than point estimates. By establishing a 95% confidence interval using the sample’s mean, standard deviation, and size, the researchers arrive at lower and upper bounds from a procedure that captures the true mean in 95% of repeated samples.
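As a rough sketch of that calculation, the snippet below builds a 95% interval around the 74-inch sample mean. The article does not give the sample's standard deviation or size, so the values 4.0 inches and 40 players are assumptions chosen only for illustration, as is the helper name `z_interval`. It uses the normal critical value; with a small sample, a t critical value would normally replace it.

```python
from statistics import NormalDist


def z_interval(sample_mean, sample_sd, n, level=0.95):
    """Interval for a mean: sample_mean +/- z * sample_sd / sqrt(n)."""
    z = NormalDist().inv_cdf(0.5 + level / 2)  # two-sided critical value
    margin = z * sample_sd / n ** 0.5          # margin of error
    return sample_mean - margin, sample_mean + margin


# Assumed inputs: only the 74-inch mean comes from the example in the text.
low, high = z_interval(sample_mean=74.0, sample_sd=4.0, n=40)
print(f"95% CI: {low:.2f} to {high:.2f} inches")
```

Under these assumed inputs the interval comes out at about 72.76 to 75.24 inches. A larger sample or a smaller standard deviation would narrow it.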

If the researchers want even greater confidence, they can expand the interval to a 99% confidence level. Doing so creates a broader range. Establishing the 99% confidence interval as being between 70 inches and 78 inches means that if the sampling were repeated 100 times, with an interval built from each sample in the same way, about 99 of those intervals would be expected to contain the true population mean.

A 90% confidence level implies that 90% of the interval estimates should include the population parameter.
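To see how the confidence level changes the width, the sketch below takes the 99% interval of 70 to 78 inches from the example, backs out the standard error it implies, and rebuilds the interval at 90% and 95%. It assumes the interval was formed with the normal approximation (mean plus or minus a z critical value times the standard error), which the article does not state; `z_critical` is a helper named for this example.

```python
from statistics import NormalDist

mean_height = 74.0
low_99, high_99 = 70.0, 78.0  # 99% interval from the example


def z_critical(level):
    """Two-sided standard normal critical value for a confidence level."""
    return NormalDist().inv_cdf(0.5 + level / 2)


# Half-width = z * standard_error, so the implied standard error is:
standard_error = (high_99 - low_99) / 2 / z_critical(0.99)

intervals = {}
for level in (0.90, 0.95, 0.99):
    margin = z_critical(level) * standard_error
    intervals[level] = (mean_height - margin, mean_height + margin)
    print(f"{level:.0%}: {mean_height - margin:.2f} to {mean_height + margin:.2f}")
```

With the same data, the 95% interval is roughly 70.96 to 77.04 inches and the 90% interval roughly 71.45 to 76.55 inches. Lower confidence buys a narrower range, and higher confidence requires a wider one.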

What Does a Confidence Interval Reveal?

A confidence interval is a range of values, bounded above and below a sample statistic such as the mean, that would likely contain an unknown population parameter. The confidence level is the percentage of such intervals that would contain the true population parameter if you drew random samples many times and built an interval from each one.

Why Are Confidence Intervals Used?

Statisticians use confidence intervals to measure uncertainty in a sample variable. For example, a researcher selects different samples randomly from the same population and computes a confidence interval for each sample to see how it may represent the true value of the population variable. Some intervals include the true population parameter and others do not.

What Is a Common Misconception About Confidence Intervals?

The biggest misconception regarding confidence intervals is that they represent the percentage of data from a given sample that falls between the upper and lower bounds. It would be incorrect to assume that a 99% confidence interval means that 99% of the data in a random sample falls between these bounds. What it actually means is that the interval was produced by a method that captures the population mean in 99% of repeated samples, so one can be 99% confident that the range contains the population mean.
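A quick simulation makes the difference concrete. The sketch below draws one hypothetical sample of 200 heights (assumed normal with mean 74 inches and standard deviation 4 inches, values chosen only for illustration), builds a 99% normal-approximation interval for the mean, and counts how many individual data points fall inside it.

```python
import random
from statistics import NormalDist, mean, stdev

rng = random.Random(1)
# Hypothetical sample: 200 player heights in inches.
heights = [rng.gauss(74.0, 4.0) for _ in range(200)]

z = NormalDist().inv_cdf(0.995)  # two-sided critical value for 99%
margin = z * stdev(heights) / len(heights) ** 0.5
low, high = mean(heights) - margin, mean(heights) + margin

# Share of individual observations that happen to lie inside the interval.
share_inside = sum(low <= h <= high for h in heights) / len(heights)

print(f"99% CI for the mean: {low:.2f} to {high:.2f}")
print(f"share of data points inside the interval: {share_inside:.0%}")
```

Only a small share of the individual heights falls inside the interval, nowhere near 99%. The interval describes uncertainty about the mean, not the spread of the data, and it keeps shrinking as the sample grows while the data stay just as spread out.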

What Is a T-Test?

Confidence intervals are constructed using statistical methods, such as those behind a t-test. A t-test is a type of inferential statistic used to determine if there is a significant difference between the means of two groups. Calculating a t-test requires the difference between the group means, the standard deviation of each group, and the number of data values in each group.
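The three inputs listed above are enough to compute a t statistic. The sketch below uses one common variant, Welch's unequal-variance form, which is a choice made for this example rather than something the article specifies. The two groups' numbers are hypothetical, and `welch_t` is a name invented here.

```python
from math import sqrt


def welch_t(mean_a, sd_a, n_a, mean_b, sd_b, n_b):
    """t statistic for the difference between two group means (Welch's form)."""
    # Standard error of the difference, allowing unequal group variances.
    standard_error = sqrt(sd_a ** 2 / n_a + sd_b ** 2 / n_b)
    return (mean_a - mean_b) / standard_error


# Hypothetical groups: mean height, standard deviation, and group size.
t_stat = welch_t(mean_a=74.0, sd_a=3.0, n_a=30, mean_b=72.0, sd_b=3.5, n_b=30)
print(f"t = {t_stat:.2f}")
```

The larger the statistic in absolute value, the harder the observed difference is to attribute to sampling noise. Turning it into a p-value requires the t-distribution, which is outside this sketch.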

How Do You Interpret P-Values and Confidence Intervals?

A p-value is a statistical measurement used to validate a hypothesis against observed data. A p-value less than 0.05 is conventionally considered statistically significant, indicating that the null hypothesis should be rejected. For a two-sided test, this corresponds to the null hypothesis value falling outside the 95% confidence interval; a p-value above 0.05 corresponds to the null value lying inside it.
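The link between a two-sided p-value and a 95% confidence interval can be checked numerically. The sketch below reuses the earlier estimate of 10.00 with a 95% interval of 9.50 to 10.50 and assumes a normal (z) test; the null values 9.40 and 9.60 and the helper `z_test_and_interval` are made up for illustration.

```python
from statistics import NormalDist


def z_test_and_interval(estimate, standard_error, null_value, level=0.95):
    """Two-sided z-test p-value and the matching confidence interval."""
    std_normal = NormalDist()
    z = (estimate - null_value) / standard_error
    p_value = 2 * (1 - std_normal.cdf(abs(z)))
    margin = std_normal.inv_cdf(0.5 + level / 2) * standard_error
    return p_value, (estimate - margin, estimate + margin)


# Standard error implied by a 95% interval of 10.00 +/- 0.50.
standard_error = 0.50 / NormalDist().inv_cdf(0.975)

results = {}
for null_value in (9.40, 9.60):
    p, (low, high) = z_test_and_interval(10.0, standard_error, null_value)
    inside = low <= null_value <= high
    results[null_value] = (p, inside)
    print(f"null = {null_value:.2f}: p = {p:.3f}, inside 95% CI: {inside}")
```

The null value of 9.40 sits outside the interval and yields a p-value below 0.05, while 9.60 sits inside and yields a p-value above 0.05. Under these assumptions the two tools agree.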

The Bottom Line

Confidence intervals help analysts judge how precisely a sample-based result pins down the true value, and whether an apparent effect could plausibly be due to chance. When trying to make inferences or predictions based on a sample of data, there will be some uncertainty as to whether the results actually correspond with the real-world population being studied. The confidence interval depicts the likely range within which the true value should fall.